2022.01.27

An interdisciplinary research team in electrical engineering and life sciences has developed a biomimetic eye, which works in conjunction with AI to teach drones, robots, and self-driving cars to see like insects. Using little more than a low-power chip and a lens, it can quickly identify and track objects and avoid obstacles, making it suitable for such applications as automated search and rescue, unmanned inspection, monitoring, medical care, and agriculture.

The team’s bionic eye has been featured in a number of top international journals, including Nature Electronics and the IEEE Journal of Solid-State Circuits, and has won the Ministry of Science and Technology’s 2021 Future Tech Award.

The team is headed by Prof. Tang Kea-tiong (鄭桂忠) of the Department of Electrical Engineering. According to Tang, computerized dynamic vision is akin to a motion picture in that it is composed of static images presented in rapid succession. However, storing each digital image and comparing it with the previous one is slow, power-hungry, and memory-intensive, and the hardware needed to do it is too heavy for use in a drone.
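To make the cost Tang describes concrete, here is a minimal Python sketch of the conventional store-and-compare approach. It is not the team’s code: the 640×480 grayscale resolution, the 30 frames per second, and the names `frame_difference` and `buffer_cost` are illustrative assumptions. The point it shows is that every pixel of the previous frame must be buffered and revisited on every frame, whether or not anything in the scene has moved.

```python
# Hypothetical illustration of conventional frame-by-frame motion detection.
# Resolution and frame rate are assumptions, not figures from the NTHU team.


def frame_difference(prev, curr):
    """Return the absolute per-pixel difference between two grayscale frames.

    Frames are lists of rows of 0-255 integers. Both whole frames must be
    held in memory, and every pixel is visited even if nothing moved.
    """
    return [
        [abs(c - p) for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def buffer_cost(width, height, fps, bytes_per_pixel=1):
    """Bytes needed to buffer the previous frame, and pixel comparisons per second."""
    frame_bytes = width * height * bytes_per_pixel
    comparisons_per_second = width * height * fps
    return frame_bytes, comparisons_per_second


# Assumed VGA grayscale camera running at 30 frames per second.
frame_bytes, comparisons = buffer_cost(640, 480, 30)
print(frame_bytes)   # 307200 bytes held just for the previous frame
print(comparisons)   # 9216000 pixel comparisons every second
```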

To solve this problem, the team was joined by Prof. Lo Chung-chuan (羅中泉) of the Department of Life Sciences, who has extensive knowledge of visual and spatial perception in insects. Inspired by the vision of fruit flies and bees, he turned to the “optical flow method,” which uses the way the visual scene flows across the eye to judge the distance and speed of surrounding objects, so that obstacles can be quickly identified and avoided simply by capturing their contours and geometric features.
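The article does not describe the team’s own optical flow algorithm. As an illustration only, the sketch below uses the textbook gradient-based (Lucas-Kanade style) estimate on a single image row, together with the standard motion-parallax relation for an observer moving in a straight line: a static object at bearing θ and distance d drifts across the visual field at an angular speed of v·sin θ / d. The function names and the test signal are assumptions.

```python
import math


def estimate_flow_1d(frame1, frame2):
    """Least-squares gradient estimate of a uniform 1-D image shift (pixels/frame).

    Brightness constancy gives I_x * u + I_t = 0 at every pixel, so the
    least-squares solution over all interior pixels is
    u = -sum(I_x * I_t) / sum(I_x ** 2).
    """
    num = 0.0
    den = 0.0
    for x in range(1, len(frame1) - 1):
        i_x = (frame1[x + 1] - frame1[x - 1]) / 2.0  # spatial gradient (central difference)
        i_t = frame2[x] - frame1[x]                  # temporal change between frames
        num += i_x * i_t
        den += i_x * i_x
    if den == 0.0:
        raise ValueError("row has no texture, so its motion cannot be measured")
    return -num / den


def distance_from_flow(speed, bearing_rad, angular_flow):
    """Distance to a static object seen by an observer translating at `speed`.

    For pure translation the object's angular velocity is
    speed * sin(bearing) / distance, so nearer objects flow faster.
    """
    return speed * math.sin(bearing_rad) / angular_flow


row1 = [math.sin(0.1 * x) for x in range(1000)]
row2 = [math.sin(0.1 * (x - 0.5)) for x in range(1000)]  # texture shifted by 0.5 px
print(estimate_flow_1d(row1, row2))                      # close to 0.5
print(distance_from_flow(2.0, math.pi / 2, 0.5))         # 4.0 metres
```

Because nearer objects flow faster, a drone that knows its own speed can convert flow into distance: at 2 m/s, an object directly to the side (θ = 90°) that flows past at 0.5 rad/s is 4 m away.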

Prof. Lo compares the bionic eye to a person walking down the street: merely noticing the buildings, signs, people, and parked cars along the way takes far less mental effort than judging the speed of a rapidly approaching vehicle. In the same way, the bionic eye pays close attention only to moving objects, which both speeds up image processing and reduces power consumption.
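A minimal sketch of “paying attention only to what moves,” assuming a change-detection scheme similar to that of event cameras: a pixel reports an event only when its brightness changes by more than a threshold, and later processing stages handle only that short event list. The threshold of 15 grey levels and the function name `changed_pixels` are hypothetical, and in a real sensor the per-pixel test would run in hardware rather than in a Python loop.

```python
def changed_pixels(prev, curr, threshold=15):
    """Return (row, col) coordinates of pixels whose brightness changed by more than `threshold`.

    Only these "events" are passed on; static background produces nothing.
    The default threshold is an illustrative assumption.
    """
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            if abs(q - p) > threshold:
                events.append((r, c))
    return events


prev = [[10] * 4 for _ in range(4)]   # a static 4x4 scene
curr = [row[:] for row in prev]
curr[1][2] = 200                      # a small bright object moves into view
curr[2][2] = 200
events = changed_pixels(prev, curr)
print(events)                         # [(1, 2), (2, 2)]
print(len(events) / (4 * 4))          # 0.125 of the pixels need further processing
```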

Prof. Tang used an algorithm based on the principles of “pruning” and “sparsity” to train the bionic eye to mimic the visual processing of an insect eye. Unimportant weights in the calculation are set to zero and ignored, so that “the more zeros there are, the faster the calculation becomes, and the less power it consumes.” The bionic eye also performs calculations directly in memory, without shuttling data back and forth between the memory and the CPU, which can reduce power consumption by 90%.
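The pruning idea can be sketched with magnitude pruning, one common criterion that zeroes the smallest weights. The article does not say which criterion the team used, so treat this as an assumption; `prune_by_magnitude` and `sparse_dot` are illustrative names. The second function skips zero weights and counts the multiply-accumulate operations it actually performs, which is where the speed and power savings come from.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitudes."""
    n_zero = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute weight.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_zero]:
        pruned[i] = 0.0
    return pruned


def sparse_dot(weights, inputs):
    """Dot product that skips zero weights; returns (result, multiply-accumulates performed)."""
    total = 0.0
    macs = 0
    for w, x in zip(weights, inputs):
        if w == 0.0:
            continue  # a zero weight contributes nothing, so the work is skipped
        total += w * x
        macs += 1
    return total, macs


weights = [0.9, -0.05, 0.02, -0.7, 0.01, 0.4, -0.03, 0.6]
pruned = prune_by_magnitude(weights, 0.5)
print(pruned)                        # [0.9, 0.0, 0.0, -0.7, 0.0, 0.4, 0.0, 0.6]
_, macs = sparse_dot(pruned, [1.0] * 8)
print(macs)                          # 4 operations instead of 8
```

Halving the number of nonzero weights here halves the arithmetic, which matches the “more zeros, faster and cheaper” intuition quoted above; how much accuracy survives a given sparsity depends on the network and is not something this toy example measures.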

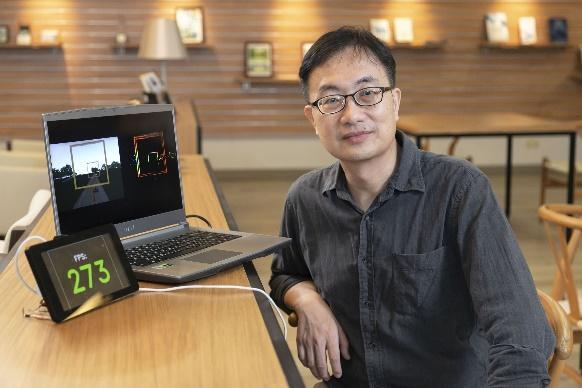

Prof. Hsieh Chih-cheng (謝志成) of the Department of Electrical Engineering developed the algorithm used in the sensor. It works much like those in surveillance and access-control systems, where a simple lens can distinguish a person from a dog; only once the camera determines that a person is present does it send the captured facial features to the computer’s recognition system to determine who it is. Because the sensor does not need to analyze every frame in full detail, it consumes only a few microwatts, about one millionth of the power drawn by a light bulb.
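The two-stage design Hsieh describes is a detection cascade: a cheap check runs on every frame, and the costly recognition step runs only when that check fires. Below is a minimal sketch in which `is_person` and `identify` are hypothetical stand-ins for the sensor’s detector and the downstream recognition system, and frames are toy dictionaries rather than images.

```python
def cascade(frames, is_person, identify):
    """Run the cheap `is_person` check on every frame; call `identify` only when it fires.

    Returns the identification results and how many times the expensive stage ran.
    Both callables are hypothetical placeholders supplied by the caller.
    """
    results = []
    expensive_calls = 0
    for frame in frames:
        if is_person(frame):          # low-power first stage sees every frame
            expensive_calls += 1
            results.append(identify(frame))  # costly second stage runs rarely
    return results, expensive_calls


frames = [
    {"kind": "dog"},
    {"kind": "person", "face": "A"},
    {"kind": "empty"},
    {"kind": "person", "face": "B"},
]
results, calls = cascade(
    frames,
    is_person=lambda f: f["kind"] == "person",
    identify=lambda f: "visitor " + f["face"],
)
print(results)  # ['visitor A', 'visitor B']
print(calls)    # 2
```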

Associate Prof. Lu Ren-shuo (呂仁碩) of the Department of Electrical Engineering designed the neural-like chip framework that integrates the software and hardware. During the design process, the team collaborated with TSMC and other leading companies in IC design to develop a new generation of AI chips. According to Lu, the main control chips for bionic drones are now under development.

The team was formed about four years ago, but it took time for the members to become familiar with each other’s fields. For example, whenever Prof. Lo began explaining the intricate workings of the fruit fly’s neural system, the electrical engineers would first ask, “How many bits is that?”

Nonetheless, Prof. Lo’s romantic enthusiasm eventually rubbed off on the practical-minded electrical engineers. The team now plans to adapt the invention for stealth drones and for a search-and-rescue device small enough to look for survivors trapped in collapsed buildings.

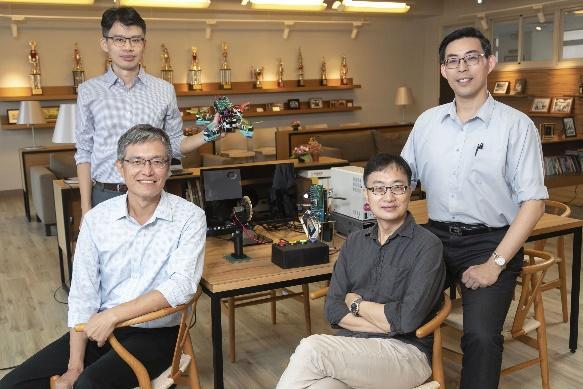

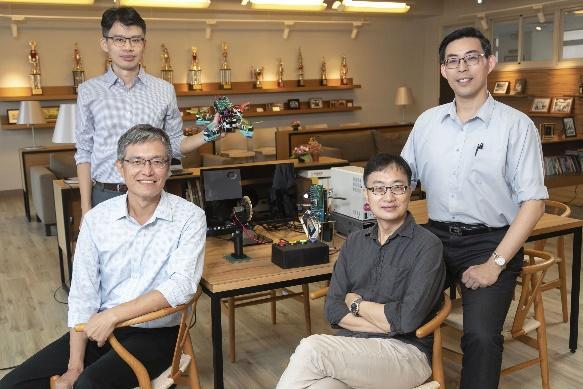

Team members from left to right: Associate Prof. Lu Ren-shuo (呂仁碩), Prof. Hsieh Chih-cheng (謝志成), Prof. Lo Chung-chuan (羅中泉), and Prof. Tang Kea-tiong (鄭桂忠).

The interdisciplinary research team has developed a biomimetic eye for use in drones, robots, and self-driving cars.

The entire interdisciplinary research team.

Hsieh Chih-cheng (right) developed the algorithm which allows the bionic eye to only pay close attention to moving objects, and Lu Ren-shuo (left) designed the neural-like chip framework which integrates the software and hardware.


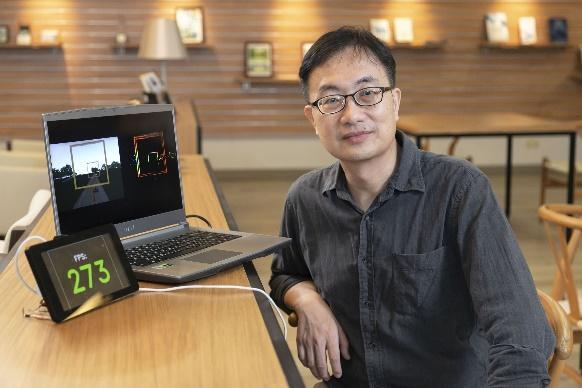

Lo Chung-chuan demonstrating how drones can be taught to see like insects.

Hsieh Chih-cheng developed the algorithm, which allows the bionic eye to only pay close attention to moving objects.

Tang used an algorithm to train the bionic eye to mimic the visual processing of an insect eye.

Lu Ren-shuo was in charge of designing the neural-like chip framework, which integrates the software and hardware.